Cache thrashing occurs when values are repeatedly evicted from a cache and then fetched again shortly afterward. The cache spends its time evicting and reloading entries instead of serving hits, so the hit rate drops and overall performance degrades.

How It Happens:

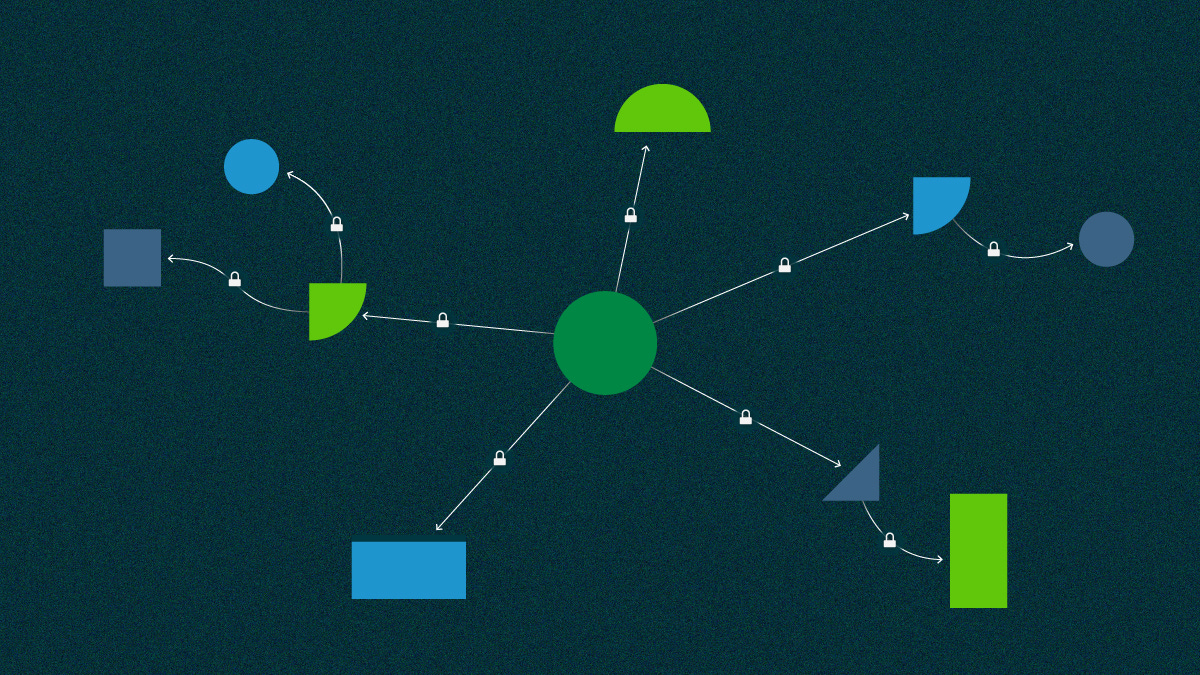

Frequent Access Patterns: Imagine a distributed system where multiple nodes frequently access certain values stored in the cache.

Cache Eviction: When the cache becomes full, it needs to evict some values to make space for new ones. If the eviction algorithm is not optimal for the access pattern, it might remove a value that will soon be requested again.

Re-Fetching Data: When a recently evicted value is requested again, it needs to be re-fetched from the underlying data store, causing additional overhead.

Back-and-Forth Motion: Each re-fetched value needs space in the full cache, so another value is evicted to make room, and that value may itself be requested again soon. This continuous cycle of evicting and re-fetching is known as cache thrashing.
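The cycle above can be reproduced with a small simulation. The following is a minimal sketch, not a production cache: `LRUCache`, `hit_rate`, and the `load` callback are hypothetical names introduced for illustration, and the backing store is faked with a lambda. It replays a loop over four keys against an LRU cache that holds only three of them, then against one that holds all four.

```python
from collections import OrderedDict


class LRUCache:
    """Minimal LRU cache that counts hits and misses (illustrative only)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.store = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def get(self, key, load):
        if key in self.store:
            self.store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.store[key]
        # Miss: evict the least recently used entry if full, then reload.
        self.misses += 1
        if len(self.store) >= self.capacity:
            self.store.popitem(last=False)
        value = load(key)
        self.store[key] = value
        return value


def hit_rate(capacity, keys, rounds):
    """Cycle through `keys` `rounds` times and return the fraction of hits."""
    cache = LRUCache(capacity)
    for _ in range(rounds):
        for key in keys:
            cache.get(key, load=lambda k: f"value-for-{k}")  # stand-in for a data store read
    return cache.hits / (cache.hits + cache.misses)


keys = ["a", "b", "c", "d"]
thrashing = hit_rate(capacity=3, keys=keys, rounds=100)  # every access misses
fits = hit_rate(capacity=4, keys=keys, rounds=100)       # only the first pass misses
print(thrashing, fits)
```

With three slots, each key is evicted just before it is needed again, so the cache never produces a hit. One extra slot is enough for the whole loop to fit, and only the first pass misses.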

Example Scenario:

In a distributed movie booking system, let's say many nodes frequently read and update the availability status of popular movies. If the cache is too small to hold the status of every in-demand movie, or if each update invalidates the cached copy, the availability status is evicted and re-fetched again and again under high demand, causing cache thrashing.

Causes of Cache Thrashing:

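Full Cache: When the cache reaches its maximum capacity, it needs to evict values to store new ones. A full cache is normal on its own; thrashing starts when the data in active use is larger than the cache, so values are evicted while they are still needed.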

Inappropriate Eviction Algorithm: The eviction policy may not be suited for the access pattern. For example, a Least Recently Used (LRU) policy performs poorly when requests cycle through a set of values slightly larger than the cache, or when a burst of one-off reads pushes out the few values that are accessed frequently.

High Write Frequency: Frequent updates to cached values can lead to constant invalidations and reloads, exacerbating cache thrashing.
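The effect of write frequency can be isolated with a short sketch. It assumes an invalidate-on-write cache with unlimited capacity, so every miss is caused by a write rather than by eviction. The function `simulate` and the key `movie:42:seats` are hypothetical and used only for illustration.

```python
def simulate(ops):
    """Replay ("read" | "write", key) operations against an invalidate-on-write cache.

    Returns (hits, misses) for the read operations.
    """
    cached = set()
    hits = misses = 0
    for op, key in ops:
        if op == "write":
            cached.discard(key)  # the cached copy is now stale, so drop it
        elif key in cached:
            hits += 1
        else:
            misses += 1
            cached.add(key)  # reload from the underlying data store
    return hits, misses


key = "movie:42:seats"  # hypothetical cache key
read_heavy = [("read", key)] * 8
write_heavy = [("write", key), ("read", key)] * 4

print(simulate(read_heavy))   # (7, 1): only the first read misses
print(simulate(write_heavy))  # (0, 4): every read follows an invalidation
```

When a write precedes every read, the cache never serves a hit even though nothing is evicted for lack of space.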

Impact on Distributed Systems:

Increased Latency: Frequent cache misses lead to additional network calls to fetch data from remote nodes or central databases, increasing response times.

High Network Traffic: The constant need to synchronize caches and fetch data increases network traffic, which can become a bottleneck.

Reduced Throughput: The overall throughput of the system decreases as more resources are spent handling cache misses and synchronizations.
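The latency impact can be estimated with simple arithmetic. The sketch below assumes every request first checks the cache and that a miss additionally pays for a fetch from the backing store. The 1 ms and 50 ms figures are made-up values for illustration, not measurements.

```python
def average_latency_ms(hit_rate, cache_ms, backend_ms):
    """Expected latency per request: every request checks the cache,
    and misses also pay for the backend fetch."""
    return cache_ms + (1.0 - hit_rate) * backend_ms


# Assumed figures, for illustration only: 1 ms cache lookup, 50 ms remote fetch.
healthy = average_latency_ms(hit_rate=0.95, cache_ms=1.0, backend_ms=50.0)    # about 3.5 ms
thrashing = average_latency_ms(hit_rate=0.20, cache_ms=1.0, backend_ms=50.0)  # about 41 ms
print(round(healthy, 2), round(thrashing, 2))
```

Under these assumptions, a drop in hit rate from 95% to 20% makes the average request more than ten times slower, which is why thrashing is felt well before the cache stops working entirely.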

Mitigation Strategies:

Adjust Eviction Algorithm: Use an eviction algorithm better suited for the access pattern, such as LFU (Least Frequently Used) when a small set of values is accessed far more often than the rest.

Increase Cache Size: Expanding the cache so that the data in active use fits reduces the frequency of evictions and re-fetches.

Data Partitioning: Partitioning data so that each node handles specific subsets can minimize cross-node cache invalidations and updates.

Load Balancing: Distributing the load evenly among nodes can prevent hotspots that lead to cache thrashing.
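The first strategy, matching the eviction algorithm to the access pattern, can be sketched by replaying one workload under two policies. This is a simplified model rather than how any particular cache library implements LRU or LFU: the `run` function is hypothetical, LFU ties are broken in favor of evicting the least recently used key, and access counts are forgotten when a key is evicted. The workload warms up two hot keys and then mixes them with a stream of keys that are each read only once.

```python
from collections import OrderedDict


def run(policy, capacity, accesses):
    """Replay `accesses` with "lru" or "lfu" eviction and return the hit count."""
    counts = OrderedDict()  # key -> access count, ordered least to most recently used
    hits = 0
    for key in accesses:
        if key in counts:
            hits += 1
            counts[key] += 1
            counts.move_to_end(key)
            continue
        if len(counts) >= capacity:
            if policy == "lru":
                victim = next(iter(counts))  # least recently used key
            else:
                # Lowest access count; min() keeps the first minimum it sees,
                # so ties go to the least recently used key.
                victim = min(counts, key=counts.get)
            del counts[victim]
        counts[key] = 1
    return hits


hot = ["h1", "h2"]
accesses = hot * 5  # warm-up: the hot keys build up access counts
for i in range(50):
    # Hot reads mixed with two keys that are never requested again.
    accesses += hot + [f"cold-{2 * i}", f"cold-{2 * i + 1}"]

lru_hits = run("lru", 3, accesses)  # 10
lfu_hits = run("lfu", 3, accesses)  # 108
print(lru_hits, lfu_hits, len(accesses))
```

With three slots, LRU lets each pair of one-off keys push out the hot keys, so almost all of its hits come from the warm-up. LFU keeps the two hot keys resident and evicts only the one-off keys. LFU is not a universal fix, since values that were popular in the past can linger after demand moves on, so the choice should follow the observed access pattern.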

By understanding and addressing the causes of cache thrashing, distributed systems can be optimized to maintain high efficiency and performance.